I was attempting to move a VM from one host to another, and received the following error: “Host does not support the virtual hardware configuration of virtual machine. The IO Filters(s) XXXX configured on the VM’s disk are not installed on the destination host.”

At one point, I was using a VM accelerator solution that was not cleanly removed. It took me a while to figure out how to remove the IO filter from the VM, so hopefully this guide will save you some time.

Part 1 – Remove setting from the VM

After searching the config files of the VM, I came across the VM’s VMDK descriptor file. This is not the large VMDK file that holds the disk data, but the small (roughly 1 KB) text descriptor file, and it is the one I had to edit.

There are two lines that contain configurations for the IO filter, and both need to be removed. These are the ddb.iofilters and ddb.sidecars settings. Both lines can just be removed and the file saved.
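For anyone who would rather script the edit than open the file by hand, here is a minimal sketch of the same change. It assumes the two settings each start a line in the descriptor, that the VM is powered off, and that keeping a `.bak` copy next to the descriptor is acceptable. The function name `strip_iofilter_lines` is my own and not part of any VMware tooling.

```shell
# Sketch only: remove the ddb.iofilters and ddb.sidecars lines from a
# VMDK descriptor file, keeping a backup copy alongside it.
# Assumption: each setting starts at the beginning of its own line.
strip_iofilter_lines() {
  descriptor="$1"
  backup="${descriptor}.bak"

  # Keep the original so the change can be rolled back.
  cp "$descriptor" "$backup" || return 1

  # Rewrite the descriptor without the two IO filter settings.
  grep -v -e '^ddb\.iofilters' -e '^ddb\.sidecars' "$backup" > "$descriptor"
}
```

You would call it with the path to the descriptor (not the data file), for example `strip_iofilter_lines /path/to/vm.vmdk`, where the path is a placeholder for your own datastore location.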

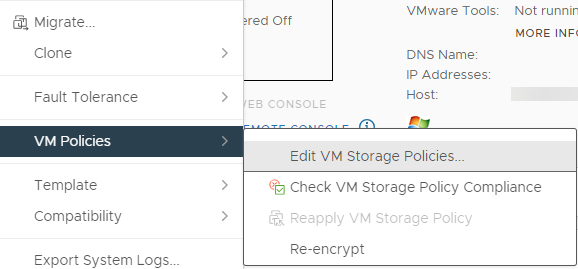

Upon trying to migrate the VM after removing these lines, I received the same error as before. I needed to make the host aware of these changes somehow. This was achieved by right-clicking the VM –> VM Policies –> Edit VM Storage Policies...

I didn’t have to change anything, but just needed to click OK.

After doing those tasks, I was able to successfully migrate the VM!

Part 2 – Remove setting from the Host

Although I probably could have done this first, I was in a hurry and didn’t want to impact production VMs. The process to remove the IO filter from the host is fairly quick and easy, but will require the host to be in maintenance mode, and a reboot is probably useful afterwards.

Put the host into maintenance mode.

SSH into the host.

Run “esxcli software vib list” to view a list of all installed VIBs, and find the entry that belongs to the IO filter.

Run “esxcli software vib remove -n filtername” (replacing filtername with the name of the filter’s VIB from the list) to remove the filter.

While a reboot isn’t required, it is suggested.
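The host-side steps above can be sketched as a small script to run from the SSH session. This is an illustration under a few assumptions: it enters maintenance mode with `esxcli system maintenanceMode set` rather than through the UI, the helper names `run` and `remove_iofilter_vib` are my own, and it defaults to a dry run that only prints each command so you can check it before anything changes on the host.

```shell
# Sketch only: print (or, with DRY_RUN=0, execute) the commands used to
# remove an IO filter VIB from an ESXi host.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"          # dry run: show the command instead of running it
  else
    "$@"
  fi
}

remove_iofilter_vib() {
  vib_name="$1"          # placeholder: take the real name from "vib list"
  if [ -z "$vib_name" ]; then
    echo "usage: remove_iofilter_vib <vib-name>" >&2
    return 1
  fi

  # Assumption: maintenance mode is entered from the shell, not the UI.
  run esxcli system maintenanceMode set --enable true
  # List installed VIBs so the filter's name can be confirmed.
  run esxcli software vib list
  # Remove the IO filter's VIB by name.
  run esxcli software vib remove -n "$vib_name"
}
```

Running `remove_iofilter_vib filtername` prints the three commands. Setting `DRY_RUN=0` first makes it execute them, after which the host can be rebooted and taken out of maintenance mode.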