In the beginning there was Fibre Channel (FC), and it was good. If you wanted a true SAN — versus shared direct-attached SCSI storage — FC is what you got. But FC was terribly expensive, requiring dedicated switches and host bus adapters, and it was difficult to support in geographically distributed environments. Then, around six or seven years ago, iSCSI hit the SMB market in a big way and slowly began its climb into the enterprise.

The intervening time has seen a lot of ill-informed wrangling about which one is better. Sometimes, the iSCSI-vs.-FC debate has reached the level of a religious war.

This battle has been the result of two main factors. First, the storage market was split between big incumbent storage vendors, who had invested heavily in FC marketing, and younger vendors with low-cost, iSCSI-only offerings. Second, admins tend to like what they know and distrust what they don't. If you've run FC SANs for years, you are likely to believe that iSCSI is a slow, unreliable architecture and would sooner die than run a critical service on it. If you've run iSCSI SANs, you probably think FC SANs are massively expensive and a bear to set up and manage. Neither is entirely true.

Now that we're about a year down the pike from the ratification of the FCoE (Fibre Channel over Ethernet) standard, things aren't much better. Many buyers still don't understand the differences between iSCSI and Fibre Channel. Though the topic could easily fill a book, here's a quick rundown.

The fundamentals of FC

FC is a dedicated storage networking architecture that was standardized in 1994. Today, it is generally implemented with dedicated HBAs (host bus adapters) and switches — which is the main reason FC is considered more expensive than other storage networking technologies.

As for performance, it's hard to beat the low latency and high throughput of FC, because FC was built from the ground up to handle storage traffic. The processing cycles required to generate and interpret FCP (Fibre Channel Protocol) frames are offloaded entirely to dedicated low-latency HBAs. This frees the server's CPU to handle applications rather than talk to storage.

FC is available in 1Gbps, 2Gbps, 4Gbps, 8Gbps, 10Gbps, and 20Gbps speeds. Switches and devices that support 1Gbps, 2Gbps, 4Gbps, and 8Gbps speeds are generally backward compatible with their slower brethren. The 10Gbps and 20Gbps devices are not, because they use a different line-encoding scheme; these two speeds are generally used for interswitch links.
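The encoding difference is easy to quantify. The slower FC speeds use 8b/10b line encoding, which spends 10 line bits for every 8 data bits, while 10Gbps and 20Gbps FC use the leaner 64b/66b scheme. The Python sketch below computes usable data rates from line rates; the gigabaud figures in it are nominal values assumed for illustration, so check them against the relevant FC physical-interface specification before relying on them.

```python
# Fraction of line bits that carry data under each line-encoding scheme.
ENCODING_EFFICIENCY = {
    "8b/10b": 8 / 10,    # 1, 2, 4 and 8 Gbps FC
    "64b/66b": 64 / 66,  # 10 and 20 Gbps FC
}

# Nominal line rates in gigabaud, assumed here for illustration.
LINE_RATES = {
    "1GFC": (1.0625, "8b/10b"),
    "2GFC": (2.125, "8b/10b"),
    "4GFC": (4.25, "8b/10b"),
    "8GFC": (8.5, "8b/10b"),
    "10GFC": (10.51875, "64b/66b"),
}


def data_rate_gbps(line_rate_gbaud: float, encoding: str) -> float:
    """Usable bit rate once line-encoding overhead is removed."""
    return line_rate_gbaud * ENCODING_EFFICIENCY[encoding]


for name, (gbaud, encoding) in LINE_RATES.items():
    print(f"{name}: {gbaud} GBd, {encoding} -> {data_rate_gbps(gbaud, encoding):.2f} Gbps")
```

Under these assumptions, 8Gbps FC carries about 6.8Gbps of data while 10Gbps FC carries about 10.2Gbps, which is a large part of why the faster links switched encodings.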

FCP is also optimized to handle storage traffic. Unlike protocols that run on top of TCP/IP, FCP is a significantly thinner, single-purpose protocol, which generally results in lower switching latency. It also includes a built-in flow control mechanism that ensures data isn't sent to a device (either storage or server) that isn't ready to accept it. In my experience, you can't achieve the same low interconnect latency with any other storage protocol in existence today.
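FC implements this flow control with buffer-to-buffer credits: a port may transmit a frame only while it holds a credit, and the receiver hands a credit back (an R_RDY primitive) each time it frees a buffer. The toy Python model below illustrates the idea; the class and method names are hypothetical, and it ignores real-world details such as credit recovery and link distance.

```python
from collections import deque


class CreditedLink:
    """Toy model of credit-based flow control, loosely after FC buffer-to-buffer credits."""

    def __init__(self, credits: int):
        self.credits = credits      # receive buffers the receiver has advertised
        self.rx_queue = deque()     # frames sitting in the receiver's buffers

    def try_send(self, frame) -> bool:
        """Transmit only if a credit is available; otherwise hold the frame."""
        if self.credits == 0:
            return False            # receiver not ready: the sender waits, nothing is dropped
        self.credits -= 1
        self.rx_queue.append(frame)
        return True

    def receiver_consume(self):
        """Receiver processes one frame and returns a credit (an R_RDY in real FC)."""
        frame = self.rx_queue.popleft()
        self.credits += 1
        return frame


link = CreditedLink(credits=2)
print([link.try_send(f"frame{i}") for i in range(3)])  # third send is refused
link.receiver_consume()
print(link.try_send("frame2"))                          # succeeds once a credit returns
```

The key design point is that the sender blocks rather than transmitting and hoping, so frames are never dropped for lack of buffer space, unlike a typical Ethernet switch under congestion.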

Yet FC and FCP have drawbacks — and not just high cost. One is that supporting storage interconnectivity over long distances can be expensive. If you want to configure replication to a secondary array at a remote site, either you’re lucky enough to afford dark fiber (if it’s available) or you’ll need to purchase expensive FCIP distance gateways.

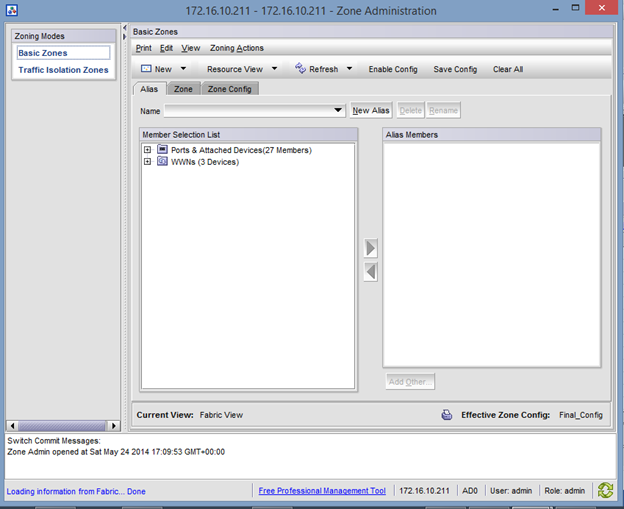

In addition, managing an FC infrastructure requires a specialized skill set, so finding experienced administrators can be an issue. For example, FC zoning makes heavy use of long hexadecimal World Wide Node and Port Names (similar to MAC addresses in Ethernet), which can be a pain to manage if the fabric changes frequently.
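WWNs are 64-bit identifiers usually written as 16 hex digits, but different tools print them with or without colons and in upper or lower case, which makes zoning changes error-prone. A small helper that normalizes them before comparison can help. The function below is a hypothetical sketch, not part of any vendor's zoning tool, and the WWPNs in it are made up.

```python
import re


def normalize_wwn(raw: str) -> str:
    """Return a WWN as lowercase, colon-separated hex, e.g. '10:00:00:00:c9:aa:bb:cc'.

    Accepts colon-, hyphen- or space-separated input as well as bare hex digits.
    """
    digits = re.sub(r"[:\-\s]", "", raw).lower()
    if not re.fullmatch(r"[0-9a-f]{16}", digits):
        raise ValueError(f"not a 64-bit WWN: {raw!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


# Hypothetical zone members copied from two tools that format WWPNs differently.
zone_a = {normalize_wwn(w) for w in ["10:00:00:00:C9:AA:BB:CC", "500601604460111D"]}
zone_b = {normalize_wwn(w) for w in ["10000000c9aabbcc", "50:06:01:60:44:60:11:1d"]}
print(zone_a == zone_b)  # True: same members despite different notation
```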

The nitty-gritty on iSCSI

iSCSI is a storage networking protocol built on top of the TCP/IP networking protocol. Ratified as a standard in 2004, iSCSI's greatest claim to fame is that it runs over the same network equipment that runs the rest of the enterprise network. It does not require any specialized hardware, which makes it comparatively inexpensive to implement.
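Because iSCSI rides on ordinary TCP/IP, basic troubleshooting can use ordinary networking tools. As a minimal sketch, the Python function below checks whether a target portal accepts TCP connections on port 3260, the well-known iSCSI port. The function name is hypothetical, and a successful connection says nothing about whether an iSCSI login would succeed.

```python
import socket

ISCSI_PORT = 3260  # IANA-registered well-known port for iSCSI targets


def portal_reachable(host: str, port: int = ISCSI_PORT, timeout: float = 2.0) -> bool:
    """Return True if a plain TCP connection to the target portal succeeds.

    This only shows that ordinary TCP/IP reaches the portal; it does not
    perform an iSCSI login or check any LUN.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `portal_reachable("192.0.2.10")` would probe a hypothetical portal at that documentation-range address.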

From a performance perspective, iSCSI lags behind FC/FCP. But when iSCSI is implemented properly, the difference boils down to a few milliseconds of additional latency, caused by the overhead of encapsulating SCSI commands within the general-purpose TCP/IP networking protocol. That overhead can make a huge difference for extremely high transactional I/O loads, and it is the source of most claims that iSCSI is unfit for use in the enterprise. Such workloads are rare outside of the Fortune 500, however, so for most organizations the practical performance difference is much smaller.
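Why a fixed per-I/O overhead hurts some workloads far more than others follows from Little's law: with a single outstanding I/O, a workload can complete at most 1,000 divided by the latency in milliseconds operations per second. The sketch below uses made-up latencies to compare the relative loss when the same overhead is added to a fast, cache-served I/O and to a slower, disk-bound one.

```python
def qd1_iops(latency_ms: float) -> float:
    """Little's law with one outstanding I/O: IOPS = 1000 / latency in milliseconds."""
    return 1000.0 / latency_ms


def relative_iops_loss(base_latency_ms: float, overhead_ms: float) -> float:
    """Fraction of IOPS lost when a fixed protocol overhead is added to every I/O."""
    return 1.0 - qd1_iops(base_latency_ms + overhead_ms) / qd1_iops(base_latency_ms)


# Hypothetical latencies, for illustration only.
OVERHEAD_MS = 0.5
for label, base_ms in [("cache-served I/O", 0.5), ("disk-bound I/O", 8.0)]:
    loss = relative_iops_loss(base_ms, OVERHEAD_MS)
    print(f"{label}: {qd1_iops(base_ms):.0f} -> "
          f"{qd1_iops(base_ms + OVERHEAD_MS):.0f} IOPS ({loss:.1%} lower)")
```

With these assumed numbers, the added latency halves the IOPS of the 0.5 ms workload but costs the 8 ms workload only about 6%, which is why the gap matters mainly for extremely latency-sensitive transactional loads.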

iSCSI also places a larger load on the server's CPU. Though hardware iSCSI HBAs do exist, most iSCSI implementations use a software initiator, which loads the server's processor with the task of creating, sending, and interpreting storage commands. This, too, has been used as an effective argument against iSCSI. However, because servers today often ship with significantly more CPU resources than most applications can hope to use, the cases where this makes any substantive difference are few and far between.

iSCSI can hold its own with FC in terms of throughput by using multiple 1Gbps Ethernet links or 10Gbps Ethernet links. Because it runs over TCP/IP, it can also be used over great distances through existing WAN links. This usage scenario is usually limited to SAN-to-SAN replication, but it is significantly easier and less expensive to implement than FC-only alternatives.

Aside from the savings in infrastructure costs, many enterprises find iSCSI much easier to deploy. Much of the skill set required to implement iSCSI overlaps with that of general network operation. This makes iSCSI extremely attractive to smaller enterprises with limited IT staffing and largely explains its popularity in that segment.

This ease of deployment is a double-edged sword: because iSCSI is easy to implement, it is also easy to implement incorrectly. Common mistakes include not using dedicated network interfaces for storage traffic, not ensuring that the switches support features such as flow control and jumbo frames, and not implementing multipath I/O; any of these can result in lackluster performance. Internet forums abound with stories of failed iSCSI deployments that could have been avoided by addressing these factors.
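Multipath I/O spreads traffic across several NIC-to-portal paths and routes around a failed one. The Python class below is a toy round-robin path selector with failover, meant only to illustrate the policy; the path names are hypothetical, and production MPIO stacks (such as Linux dm-multipath or Windows MPIO) use their own policies and health checks.

```python
from itertools import cycle


class RoundRobinMultipath:
    """Toy round-robin path selector that skips paths marked as failed."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.healthy = set(self.paths)
        self._rr = cycle(self.paths)

    def mark_failed(self, path):
        self.healthy.discard(path)

    def mark_restored(self, path):
        self.healthy.add(path)

    def next_path(self):
        # Walk at most one full rotation looking for a healthy path.
        for _ in range(len(self.paths)):
            path = next(self._rr)
            if path in self.healthy:
                return path
        raise RuntimeError("all paths are down")


mp = RoundRobinMultipath(["nic1->portalA", "nic2->portalB"])
print([mp.next_path() for _ in range(4)])  # alternates between the two paths
mp.mark_failed("nic2->portalB")
print([mp.next_path() for _ in range(2)])  # all I/O now goes via nic1->portalA
```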

Fibre Channel over IP

FCIP (Fibre Channel over IP) is a niche protocol that was ratified in 2004. It is a standard for encapsulating FC frames within TCP/IP packets so that they can be shipped over a TCP/IP network. It is almost exclusively used for bridging FC fabrics at multiple sites to enable SAN-to-SAN replication and backup over long distances.

Because large FC frames must be split across multiple TCP/IP packets (WAN circuits typically don't support packets larger than 1,500 bytes), FCIP is not built for low latency. Instead, it is built to link geographically separated Fibre Channel fabrics when dark fiber isn't available to do so with native FCP. FCIP is almost always found in FC distance gateways (essentially FC/FCP-to-FCIP bridges) and is rarely, if ever, used natively by storage devices as a server-to-storage access method.
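A rough calculation shows the cost of that splitting. The sketch below estimates how many TCP segments one maximum-size FC frame occupies after FCIP encapsulation. The byte counts are assumptions noted in the comments (a maximum FC frame of about 2,148 bytes and a 28-byte encapsulation header), and because TCP is a byte stream, frame boundaries will not line up neatly with segments in practice.

```python
import math

# Assumed byte counts; verify against RFC 3643 / RFC 3821 and your gateway's documentation.
MAX_FC_FRAME = 2148      # SOF (4) + header (24) + max payload (2112) + CRC (4) + EOF (4)
FCIP_ENCAP_HEADER = 28   # FC frame encapsulation header
IPV4_HEADER = 20         # no IP options
TCP_HEADER = 20          # no TCP options


def segments_per_frame(fc_frame_bytes: int = MAX_FC_FRAME, mtu: int = 1500) -> int:
    """Approximate number of TCP segments needed to carry one encapsulated FC frame."""
    mss = mtu - IPV4_HEADER - TCP_HEADER   # TCP payload bytes per packet
    return math.ceil((fc_frame_bytes + FCIP_ENCAP_HEADER) / mss)


print(segments_per_frame())            # standard 1,500-byte MTU
print(segments_per_frame(mtu=9000))    # jumbo frames, rarely available on WAN links
```

Under these assumptions, each full-size frame spills into two segments at a 1,500-byte MTU and fits in one only with jumbo frames, which most WAN circuits do not carry.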

Fibre Channel over Ethernet

FCoE (Fibre Channel over Ethernet) is the newest storage networking protocol of the bunch. Ratified as a standard in June of last year, FCoE is the Fibre Channel community’s answer to the benefits of iSCSI. Like iSCSI, FCoE uses standard multipurpose Ethernet networks to connect servers with storage. Unlike iSCSI, it does not run over TCP/IP — it is its own Ethernet protocol occupying a space next to IP in the OSI model.
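Being "its own Ethernet protocol" is literal: FCoE frames carry their own EtherType, 0x8906 (with 0x8914 for the FCoE Initialization Protocol), whereas iSCSI travels inside IPv4 (0x0800) or IPv6 (0x86DD) packets. The hypothetical classifier below reads that field from a raw Ethernet II frame, skipping a single 802.1Q VLAN tag if one is present; it is a sketch, not a full frame parser.

```python
import struct

# Well-known EtherType values relevant to storage traffic.
ETHERTYPES = {
    0x0800: "IPv4 (iSCSI rides inside TCP)",
    0x86DD: "IPv6 (iSCSI rides inside TCP)",
    0x8906: "FCoE",
    0x8914: "FIP (FCoE Initialization Protocol)",
}
VLAN_TPID = 0x8100  # 802.1Q tag marker


def classify_frame(frame: bytes) -> str:
    """Classify a raw Ethernet II frame by EtherType, skipping one 802.1Q VLAN tag."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    (ethertype,) = struct.unpack("!H", frame[12:14])  # bytes 0-11 are dst and src MACs
    if ethertype == VLAN_TPID:
        if len(frame) < 18:
            raise ValueError("truncated VLAN tag")
        (ethertype,) = struct.unpack("!H", frame[16:18])  # skip the 2-byte tag control field
    return ETHERTYPES.get(ethertype, f"other (0x{ethertype:04x})")


macs = bytes(12)  # placeholder destination and source MAC addresses
print(classify_frame(macs + struct.pack("!H", 0x8906) + bytes(14)))
print(classify_frame(macs + struct.pack("!HHH", 0x8100, 100, 0x0800) + bytes(20)))
```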

This difference is important to understand because it has both good and bad consequences. The good is that, even though FCoE runs over the same kind of Ethernet switches that iSCSI does (provided they support the Data Center Bridging extensions that make Ethernet lossless), it experiences significantly lower end-to-end latency because no TCP/IP headers need to be created and interpreted. The bad is that it cannot be routed over a TCP/IP WAN. Like FC, FCoE can only run over a local network and requires a bridge to connect to a remote fabric.

On the server side, most FCoE implementations use 10Gbps Ethernet FCoE CNAs (Converged Network Adapters), which can act as both network adapters and FCoE HBAs, offloading the work of talking to storage much as FC HBAs do. This is an important point, because the requirement for a separate FC HBA was often a good reason to avoid FC altogether. As time goes on, servers may commonly ship with FCoE-capable CNAs built in, essentially eliminating this cost factor.

FCoE's primary benefits can be realized when it is implemented as an extension of a pre-existing Fibre Channel network. Despite its different physical transport, which requires a few extra steps to implement, FCoE can use the same management tools as FC, and much of the experience gained in operating an FC fabric applies to its configuration and maintenance.

Putting it all together

There's no doubt that the debate between FC and iSCSI will continue to rage. Both architectures are great for certain tasks. However, saying that FC is good for the enterprise while iSCSI is good for SMBs is no longer an acceptable answer. The availability of FCoE goes a long way toward eating into iSCSI's cost and convergence argument, while the increasing prevalence of 10Gbps Ethernet and rising server CPU performance eat into FC's performance argument.

Whatever technology you decide to implement for your organization, try not to get sucked into the religious war and do your homework before you buy. You may be surprised by what you find.
